AI Shield to Protect Network from Cyber Threat

The Trend

In an era defined by rapid technological advancement and digital transformation, the cybersecurity landscape is undergoing fundamental change. As cyber threats increase, enterprises face mounting challenges in defending their assets against an ever-expanding array of attacks. High-profile data breaches, coupled with a global shortage of skilled cybersecurity professionals, underscore the urgent need for innovative solutions capable of safeguarding sensitive data and critical infrastructure. Against this backdrop, artificial intelligence (AI) and cybersecurity are converging, promising to change how organizations detect, respond to, and mitigate cyber threats.

The surge in demand for integrating AI algorithms into cybersecurity is driven by several compelling trends. Faced with constant attacks from advanced cyber threats and a pressing need for regulatory compliance, IT personnel worldwide are seeking intelligent and adaptive security solutions capable of keeping pace with the evolving threat landscape. Furthermore, the integration of AI into security operations empowers organizations to automate routine tasks and achieve greater operational efficiency.

The Challenge

As companies begin implementing AI cybersecurity hardware, they encounter numerous difficulties that demand innovative solutions and strategic approaches. The primary obstacle is the complexity of integrating AI hardware into existing IT infrastructure. IT professionals must navigate compatibility issues and interoperability concerns while ensuring that the new hardware works smoothly with established security systems. Additionally, the resource-intensive nature of AI cybersecurity requires careful consideration of computational resources, memory allocation, and storage capacity to ensure optimal performance and scalability.

Moreover, the sensitive nature of data processed by AI cybersecurity hardware underscores the critical importance of privacy and security. IT professionals face the tough task of safeguarding sensitive data against breaches, unauthorized access, and compliance violations while harnessing the power of AI for threat detection and mitigation. Balancing the need for robust data protection measures with using data effectively for AI-driven insights is a delicate challenge, requiring the implementation of rigorous encryption and access control techniques.

NEXCOM Solution

NEXCOM offers a solution to empower organizations to explore the potential of AI-driven cybersecurity to fortify network defense, protect digital assets, and secure a safer future in the digital age.

NEXCOM's NSA 7160R-based cybersecurity solution addresses the multifaceted challenges in implementing AI hardware in cybersecurity operations. Leveraging a modular design and sharing the same form factor with the previous generation of its product family, NEXCOM's solution mitigates integration complexity by seamlessly integrating with existing IT infrastructure, minimizing compatibility issues.

Furthermore, NSA 7160R is designed with scalability in mind, enabling companies to navigate resource constraints effectively by dynamically allocating computational resources, optimizing memory usage, and scaling storage capacity to meet evolving operational demands. Customers can choose different DDR5 speeds based on their budget and requirements. A flexible configuration of LAN modules enables up to 2.6TB Ethernet connectivity per system or allows up to 128GB of additional storage through storage adaptors.

By prioritizing performance optimization, NEXCOM's solution enables enterprises to achieve superior detection accuracy, response times, and scalability, delivering actionable insights and proactive threat mitigation capabilities to safeguard against emerging cyber threats effectively. NSA 7160R supports the latest dual 5th Gen Intel® Xeon® Scalable processors and is backward compatible with 4th Gen Intel® Xeon® Scalable processors, allowing customers to scale up both in CPU core count and processor generation.

In addressing the critical concerns of data privacy and security, NEXCOM's solution implements robust hardware-based encryption, helping to ensure the confidentiality, integrity, and availability of sensitive information processed by AI. The available accelerators include Intel® Crypto Acceleration, Intel® QuickAssist Software Acceleration, Intel® Data Streaming Accelerator (DSA), Intel® Deep Learning Boost (Intel® DL Boost), Intel® Advanced Matrix Extensions (AMX), and more. Note that the set of accelerators may vary depending on the selected processor SKU.

NSA 7160R empowers IT personnel to proceed with deployments confidently. To validate its efficacy in AI cybersecurity, NEXCOM conducted a series of tests comparing two configurations, one powered by dual 4th Gen Intel® Xeon® Scalable processors (DUT 1) and one by dual 5th Gen Intel® Xeon® Scalable processors (DUT 2). The CPU SKUs chosen for testing are matched in performance tier and core count for a fair and unbiased comparison. The rest of the configuration was identical on both DUTs. The detailed test configuration is shown in TABLE I.

For the tests, two open-source security AI models were chosen: MalConv and BERT-base-cased.

TABLE I

DUT 1 AND DUT 2 TEST CONFIGURATIONS

| Item | DUT1 (4th Gen Intel® Xeon®-based) | DUT2 (5th Gen Intel® Xeon®-based) |
| --- | --- | --- |
| CPU | 2 x Intel® Xeon® Gold 6430 processors | 2 x Intel® Xeon® Gold 6530 processors |
| Memory | 512GB, 16 (8+8) x 32G DDR5 4800 RDIMMs | 512GB, 16 (8+8) x 32G DDR5 4800 RDIMMs |
| SSD | 512GB, 1 x 2.5" SSD SATA III | 512GB, 1 x 2.5" SSD SATA III |
| Storage | 16TB, 4 x M.2 2280 PCIe4 ×4 4TB NVMe modules in slot 2 | 16TB, 4 x M.2 2280 PCIe4 ×4 4TB NVMe modules in slot 2 |
| Ubuntu | 22.04 | 22.04 |
| Kernel | v5.19 | v5.19 |

Test Results for MalConv AI Model

MalConv (Malware Convolutional Neural Network) is a deep learning-based approach used in cybersecurity for malware detection.

While traditional malware detection methods rely on signatures or behavior analysis, which are vulnerable to circumvention by polymorphic or unseen variants, MalConv utilizes convolutional neural networks (CNNs) to directly analyze the binary data of executable files. Trained on both malicious and benign files, MalConv learns to distinguish between them based on binary data patterns. This enables MalConv to detect polymorphic or unseen malware variants by identifying malicious characteristics within the binary code itself, bypassing reliance on signatures or behavior analysis.
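To make "directly analyze executable file binary data" concrete, the following is a minimal sketch of how a file's raw bytes might be turned into a fixed-length integer sequence before being fed to a byte-level CNN. The function name `bytes_to_model_input`, the `max_len` value, and the convention of shifting byte values by one so that 0 can act as padding are illustrative assumptions, not details taken from the tested MalConv models.

```python
def bytes_to_model_input(data: bytes, max_len: int = 16) -> list[int]:
    """Map raw file bytes to a fixed-length sequence of integer token IDs.

    Assumption for illustration: each byte value (0-255) is shifted to 1-256
    so that 0 can be reserved as the padding ID.
    """
    # Truncate long files to max_len bytes and shift every byte by one.
    ids = [b + 1 for b in data[:max_len]]
    # Pad short files with the reserved padding ID 0.
    ids.extend([0] * (max_len - len(ids)))
    return ids


# "MZ" is the usual start of a Windows executable; the rest is arbitrary.
sample = b"MZ\x90\x00\x03"
encoded = bytes_to_model_input(sample, max_len=8)
print(encoded)  # [78, 91, 145, 1, 4, 0, 0, 0]
```

A real deployment would use a far larger `max_len` and pass the resulting sequence to an embedding layer followed by convolutional layers; no signature database is consulted at any point.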

Latency and throughput of the MalConv AI model were tested on both DUTs. Together, these two metrics provide valuable insights into the model's performance, responsiveness, scalability, and efficiency in AI cybersecurity applications. Latency measures the time MalConv takes to analyze an input file and provide a classification (malicious or benign), while throughput measures how many files or data streams MalConv can process within a given time frame.
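The two metrics can be illustrated with a small timing harness. This is a minimal sketch, not the benchmark procedure used for the published results: `benchmark` and `dummy_predict` are hypothetical names, the classifier is a trivial stand-in for a real model, and samples are processed one at a time rather than in parallel.

```python
import statistics
import time


def benchmark(predict, samples, warmup=3):
    """Return mean per-sample latency (ms) and throughput (samples/second)."""
    # Warm-up calls are excluded from timing so one-time setup cost is ignored.
    for s in samples[:warmup]:
        predict(s)

    latencies_ms = []
    start = time.perf_counter()
    for s in samples:
        t0 = time.perf_counter()
        predict(s)
        latencies_ms.append((time.perf_counter() - t0) * 1000.0)
    elapsed = time.perf_counter() - start

    return {
        "mean_latency_ms": statistics.mean(latencies_ms),
        "throughput_sps": len(samples) / elapsed,
    }


def dummy_predict(data: bytes) -> bool:
    # Hypothetical stand-in for a real model's "malicious or benign" decision.
    return sum(data) % 2 == 0


result = benchmark(dummy_predict, [bytes(range(256))] * 50)
print(result)
```

In a sequential loop like this one, throughput is roughly the inverse of latency; the two diverge once inputs are batched or processed concurrently, which is why both are worth reporting.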

The results of the MalConv latency and throughput tests for both frameworks are shown in TABLE II.

TABLE II

MALCONV AI MODEL TEST RESULTS FOR LATENCY AND THROUGHPUT

| Framework | Opt Method | Model | Platform | Latency (ms) | Throughput (samples/second)/(FPS) |
| --- | --- | --- | --- | --- | --- |
| tensorflow 2.15.0 | INC 2.2 | Malconv.inc.int8.pb | DUT 1 | 12.15 | 82.3 |
| tensorflow 2.15.0 | INC 2.2 | Malconv.inc.int8.pb | DUT 2 | 11.18 | 89.47 |
| onnxruntime 1.16.3 | INC 2.2 | Malconv.inc.int8.onnx | DUT 1 | 16.55 | 60.43 |
| onnxruntime 1.16.3 | INC 2.2 | Malconv.inc.int8.onnx | DUT 2 | 14.47 | 69.1 |

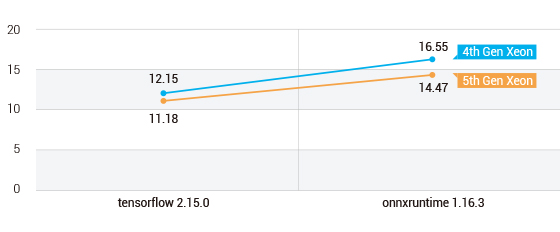

Based on these results, we can conclude that the 5th Gen Xeon-based server performs better with both frameworks and in both test items (latency and throughput).

Lower latency is essential for real-time threat detection, enabling rapid response to security incidents.

- 5th Gen Xeon DUT shows 8% lower latency in tensorflow 2.15.0 framework by spending 0.97ms less than 4th Gen Xeon DUT.

- 5th Gen Xeon DUT shows 13% lower latency in onnxruntime 1.16.3 framework by spending 2.08ms less than 4th Gen Xeon DUT.

Figure 1. MalConv AI model test results for latency

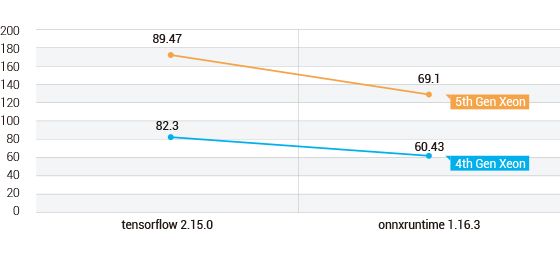

Higher throughput indicates greater volume-handling capacity, which is essential for analyzing large datasets efficiently.

- 5th Gen Xeon DUT shows 9% higher throughput in tensorflow 2.15.0 framework by analyzing 7.17 more samples per second than 4th Gen Xeon DUT.

- 5th Gen Xeon DUT shows 14% higher throughput in onnxruntime 1.16.3 framework by analyzing 8.67 more samples per second than 4th Gen Xeon DUT.

Figure 2. MalConv AI model test results for throughput
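The percentages quoted for MalConv follow directly from the TABLE II values. As a worked check, the sketch below recomputes the relative change of DUT 2 against DUT 1 for each framework; the helper name `relative_change` and the dictionary layout are illustrative choices.

```python
def relative_change(baseline: float, new: float) -> float:
    """Percentage change of `new` relative to `baseline`."""
    return (new - baseline) / baseline * 100.0


# (DUT 1, DUT 2) values copied from TABLE II.
table_ii = {
    "tensorflow 2.15.0": {"latency_ms": (12.15, 11.18), "throughput": (82.3, 89.47)},
    "onnxruntime 1.16.3": {"latency_ms": (16.55, 14.47), "throughput": (60.43, 69.1)},
}

for framework, metrics in table_ii.items():
    lat = relative_change(*metrics["latency_ms"])
    thr = relative_change(*metrics["throughput"])
    print(f"{framework}: latency {lat:+.1f}%, throughput {thr:+.1f}%")

# tensorflow 2.15.0: latency -8.0%, throughput +8.7%
# onnxruntime 1.16.3: latency -12.6%, throughput +14.3%
```

Rounded to whole percentages, these values match the 8%, 13%, 9%, and 14% figures stated in the text.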

Test Results for BERT-base-cased AI Model

BERT (Bidirectional Encoder Representations from Transformers) is a powerful natural language processing model developed by Google. The "base" version refers to the smaller and computationally less expensive variant of BERT compared to its larger counterparts like BERT-large. The "cased" variant retains the original casing of the input text, preserving capitalization information.

In AI cybersecurity, BERT-base-cased offers a versatile framework for natural language understanding in cybersecurity applications. This model can be utilized for various tasks such as threat intelligence analysis, email and message classification, malicious URL detection, incident response and threat hunting, and more.

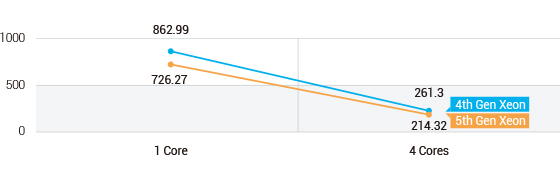

During the tests, the static, dynamic, and FP32 BERT-base-cased model latencies of each DUT were analyzed. The tests were conducted using 1 and 4 active cores to determine whether latency improves as more cores are involved. The results are shown in TABLE III.

Static model latency refers to the time the statically quantized BERT-base-cased model takes to process input data and make predictions; in static quantization, the quantization parameters are fixed ahead of time using calibration data. Dynamic model latency is the corresponding time for the dynamically quantized model, in which the quantization parameters for activations are computed at runtime for each input. FP32 model latency is the latency of the unquantized model running in 32-bit floating-point precision, which serves as the baseline. Lower latency in any of the three variants allows security teams to respond more quickly to security incidents, reducing the time and resources required for investigation and mitigation.
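The "Static qat", "Dynamic qat", and "FP32" column headers in TABLE III point to two quantized model variants and a 32-bit floating-point baseline. The following is a minimal sketch of the general idea behind int8 quantization, assuming a simple symmetric scheme; it is not the IPEX implementation, and the helper names and sample values are hypothetical.

```python
def scale_for(values):
    """Symmetric int8 scale: map the largest magnitude to 127."""
    return max(abs(v) for v in values) / 127.0


def quantize(values, scale):
    """Convert floats to int8 codes, clamped to the range [-128, 127]."""
    return [max(-128, min(127, round(v / scale))) for v in values]


def dequantize(codes, scale):
    """Map int8 codes back to approximate float values."""
    return [c * scale for c in codes]


# Static: the scale is fixed ahead of time from calibration data.
calibration = [0.5, -1.0, 2.0, -2.54]
static_scale = scale_for(calibration)

# Dynamic: the scale is computed at inference time from the actual input.
activations = [0.107, -0.313, 1.2]
dynamic_scale = scale_for(activations)

q_static = quantize(activations, static_scale)
q_dynamic = quantize(activations, dynamic_scale)
print(q_static, q_dynamic)
print(dequantize(q_static, static_scale))
print(dequantize(q_dynamic, dynamic_scale))
```

The design trade-off is that a static scale costs nothing at inference time but depends on how representative the calibration data is, whereas a dynamic scale adapts to each input at the price of extra work per inference. An FP32 model skips quantization altogether and computes on the original floating-point values.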

TABLE III

BERT-BASE-CASED AI MODEL TEST RESULTS FOR STATIC, DYNAMIC AND FP32 LATENCIES

| Framework | Opt Method | Cores Used for Test | Platform | Static qat model Latency (ms) | Dynamic qat model Latency (ms) | FP32 model Latency (ms) |
| --- | --- | --- | --- | --- | --- | --- |
| Pytorch 2.1.0 | IPEX 2.1.100 | 1 Core | DUT 1 | 97.5 | 472.46 | 862.99 |
| Pytorch 2.1.0 | IPEX 2.1.100 | 1 Core | DUT 2 | 86.28 | 327.53 | 726.27 |
| Pytorch 2.1.0 | IPEX 2.1.100 | 4 Cores | DUT 1 | 29.84 | 118.94 | 261.3 |
| Pytorch 2.1.0 | IPEX 2.1.100 | 4 Cores | DUT 2 | 25.08 | 98.78 | 214.32 |

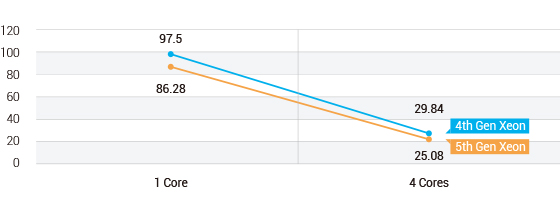

Based on these results, we can conclude that the 5th Gen Xeon-based server performs better in all 3 test items (static, dynamic, and FP32 BERT-base-cased model latencies) and in both CPU resource allocations (1 and 4 cores).

Lower static model latency is desirable for real-time threat detection, enabling rapid analysis of text data such as security alerts, email content, or chat messages. Longer latency may introduce delays in processing, affecting the responsiveness of security operations and hindering timely threat mitigation efforts.

- 5th Gen Xeon DUT shows 12% lower latency in 1 core scenario by spending 11.22ms less than 4th Gen Xeon DUT.

- 5th Gen Xeon DUT shows 16% lower latency in 4 cores scenario by spending 4.76ms less than 4th Gen Xeon DUT.

Figure 3. BERT-base-cased AI Model Test Results for Static Latency

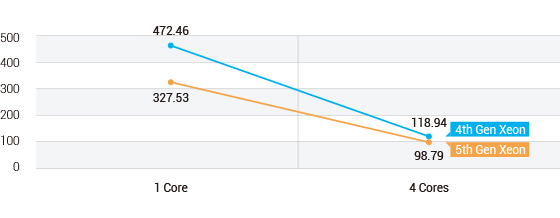

Lower dynamic model latency means each input is processed sooner, helping security operations keep pace with emerging threats and shifting attack patterns.

- 5th Gen Xeon DUT shows 31% lower latency in 1 core scenario by spending 144.93ms less than 4th Gen Xeon DUT.

- 5th Gen Xeon DUT shows 17% lower latency in 4 cores scenario by spending 20.16ms less than 4th Gen Xeon DUT.

Figure 4. BERT-base-cased AI Model Test Results for Dynamic Latency

FP32 model latency is the latency of the full-precision (32-bit floating-point) model, which involves no quantization-related accuracy trade-off. Lower FP32 latency benefits deployments that keep the model at full precision and provides the baseline against which the quantized variants are compared.

- 5th Gen Xeon DUT shows 16% lower latency in 1 core scenario by spending 136.72ms less than 4th Gen Xeon DUT.

- 5th Gen Xeon DUT shows 18% lower latency in 4 cores scenario by spending 46.98ms less than 4th Gen Xeon DUT.

Figure 5. BERT-base-cased AI Model Test Results for FP32 Latency
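The TABLE III values also show how latency changes when moving from 1 to 4 cores, and how far each model variant sits from the FP32 baseline. The sketch below derives these ratios from the published numbers only; the `speedup` helper and the dictionary keys are illustrative names.

```python
def speedup(slow_ms: float, fast_ms: float) -> float:
    """How many times faster the second latency is than the first."""
    return slow_ms / fast_ms


# Latencies in ms copied from TABLE III, keyed by (platform, active cores).
table_iii = {
    ("DUT 1", 1): {"static": 97.5, "dynamic": 472.46, "fp32": 862.99},
    ("DUT 2", 1): {"static": 86.28, "dynamic": 327.53, "fp32": 726.27},
    ("DUT 1", 4): {"static": 29.84, "dynamic": 118.94, "fp32": 261.3},
    ("DUT 2", 4): {"static": 25.08, "dynamic": 98.78, "fp32": 214.32},
}

for dut in ("DUT 1", "DUT 2"):
    for variant in ("static", "dynamic", "fp32"):
        one_core = table_iii[(dut, 1)][variant]
        four_cores = table_iii[(dut, 4)][variant]
        print(f"{dut} {variant}: {speedup(one_core, four_cores):.2f}x faster on 4 cores")

    # Static-quantized model compared with the FP32 baseline on 4 cores.
    row = table_iii[(dut, 4)]
    print(f"{dut}: static is {speedup(row['fp32'], row['static']):.1f}x faster than FP32")
```

On these figures, going from 1 to 4 cores reduces latency by roughly 3.3x to 4.0x in every case, and the statically quantized model is more than 8x faster than FP32 on both platforms.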

Test Summary

Both devices successfully executed AI security software, with the platform utilizing the 5th Gen Intel® Xeon® Scalable processor showcasing superior performance over the server employing the 4th Gen Intel® Xeon® Scalable processor. Both platforms demonstrated efficiency in latency and throughput for security-related tasks, and proved ready for AI cybersecurity.

Conclusion

As the cybersecurity landscape continues to evolve, IT personnel must remain proactive in adapting to emerging threats and leveraging the latest advancements in AI technology. Integrating AI models such as MalConv and BERT-base-cased into cybersecurity operations represents a significant advancement in the fight against cyber threats.

NEXCOM’s NSA 7160R servers offer enhanced threat detection, rapid response times, and improved operational efficiency, addressing the ever-evolving challenges faced by enterprises in safeguarding their digital assets. As both tested platforms demonstrate their significant contribution to addressing cybersecurity workloads, the decision on which platform to choose ultimately rests with the customer, who can select based on their specific requirements and the performance achieved.